Confusion matrix guide by dataaspirant.

How to read a confusion matrix in sklearn.

While `sklearn.metrics.confusion_matrix` provides a numeric matrix, I find it more useful to generate a report using the following:
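The screenshots are not reproduced in text, so here is a minimal sketch assuming the report is something like scikit-learn's `classification_report`. It prints the raw matrix next to per-class precision, recall, F1 and support. The labels (`cat`, `dog`, `bird`) are hypothetical.

```python
from sklearn.metrics import classification_report, confusion_matrix

# Hypothetical true and predicted labels for a 3-class problem
y_true = ["cat", "dog", "bird", "cat", "dog", "bird", "cat", "dog"]
y_pred = ["cat", "dog", "cat", "cat", "bird", "bird", "dog", "dog"]

# Fix the class order so rows/columns are predictable
labels = ["bird", "cat", "dog"]

# Raw counts: rows are true labels, columns are predicted labels
cm = confusion_matrix(y_true, y_pred, labels=labels)
print(cm)

# Per-class precision, recall, F1 and support as a readable text report
report = classification_report(y_true, y_pred, labels=labels)
print(report)
```

The matrix shows where the errors are; the report summarizes how each class fares.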

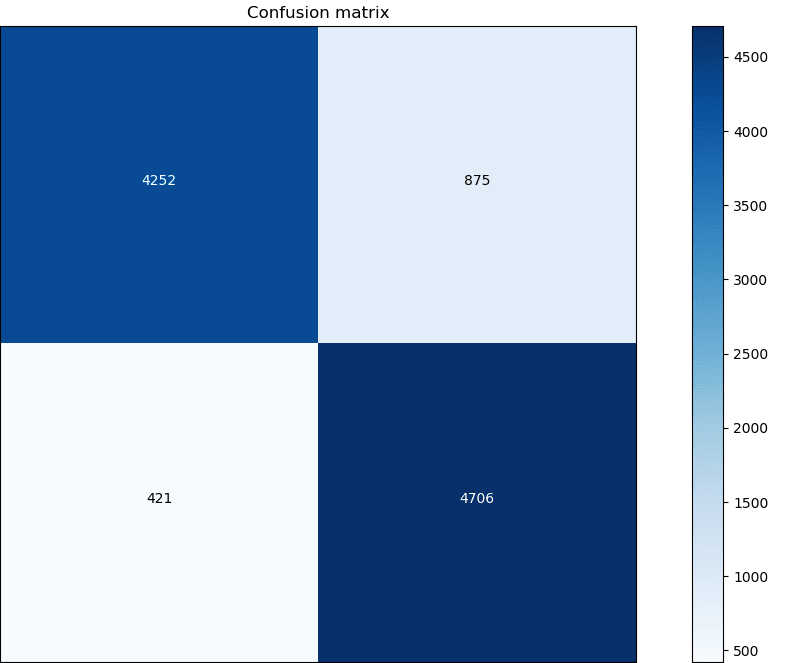

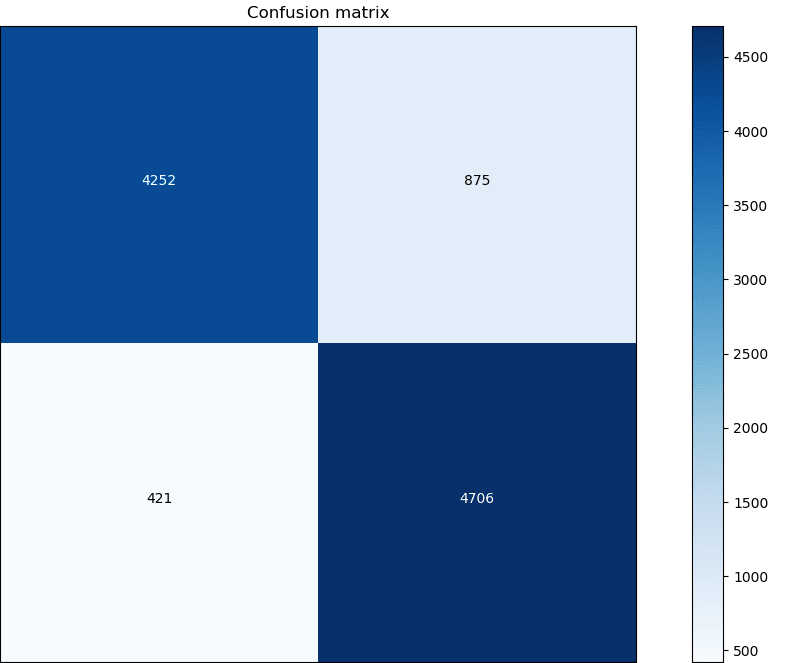

By definition, a confusion matrix $C$ is such that $C_{i,j}$ is equal to the number of observations known to be in group $i$ and predicted to be in group $j$.
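A small check of this definition, using hypothetical binary labels: each cell of scikit-learn's matrix matches a direct count of observations with true class $i$ and predicted class $j$. Rows are the true classes and columns are the predicted classes, so the matrix is generally not symmetric.

```python
import numpy as np
from sklearn.metrics import confusion_matrix

# Hypothetical labels for a binary problem (classes 0 and 1)
y_true = np.array([0, 0, 0, 1, 1])
y_pred = np.array([0, 1, 1, 1, 1])

C = confusion_matrix(y_true, y_pred)
print(C[0, 1])  # true 0, predicted 1 -> 2
print(C[1, 0])  # true 1, predicted 0 -> 0

# Recompute every cell straight from the definition:
# C[i, j] = number of observations with true class i and predicted class j
for i in range(2):
    for j in range(2):
        assert C[i, j] == np.sum((y_true == i) & (y_pred == j))
```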


The diagonal elements represent the number of points for which the predicted label is equal to the true label, while the off-diagonal elements are those that are mislabeled by the classifier.

The main diagonal (64, 237, 165) gives the correct predictions.
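The full matrix behind the 64, 237, 165 diagonal isn't reproduced in the text, so this sketch uses hypothetical 3-class labels. It shows how the diagonal (correct predictions) and the off-diagonal cells (misclassifications) can be pulled out with NumPy, and that the trace divided by the total equals accuracy.

```python
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix

# Hypothetical 3-class labels (0, 1, 2)
y_true = [0, 0, 1, 1, 1, 2, 2, 2, 2]
y_pred = [0, 1, 1, 1, 2, 2, 2, 0, 2]

cm = confusion_matrix(y_true, y_pred)

per_class_correct = np.diag(cm)   # correct predictions for each class
correct = np.trace(cm)            # sum of the main diagonal
total = cm.sum()                  # every observation
misclassified = total - correct   # sum of all off-diagonal cells

print(per_class_correct)
# Accuracy from the matrix agrees with sklearn's accuracy_score
print(correct / total, accuracy_score(y_true, y_pred))
```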

Introduction to the confusion matrix in Python (sklearn).

But after reading this article, you will never forget the confusion matrix again.

The confusion matrix is a way of tabulating the number of misclassifications, i.e., the number of predicted classes that ended up in the wrong classification bin based on the true classes.

`sklearn.metrics.confusion_matrix(y_true, y_pred, labels=None, sample_weight=None, normalize=None)`: compute a confusion matrix to evaluate the accuracy of a classification.
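A short sketch of the three optional parameters, using hypothetical spam/ham labels. `labels` fixes the row and column order, `normalize="true"` turns each row into proportions, and `sample_weight` counts each observation with a weight instead of 1. The `normalize` argument assumes scikit-learn 0.22 or later.

```python
from sklearn.metrics import confusion_matrix

# Hypothetical labels for a binary spam filter
y_true = ["spam", "ham", "ham", "spam", "ham", "ham"]
y_pred = ["spam", "ham", "spam", "ham", "ham", "ham"]
order = ["spam", "ham"]

# labels fixes the row/column order (default: sorted unique labels)
cm = confusion_matrix(y_true, y_pred, labels=order)

# normalize="true" divides each row by its total, so each row sums to 1
cm_norm = confusion_matrix(y_true, y_pred, labels=order, normalize="true")

# sample_weight adds each observation's weight to its cell instead of 1
weights = [2, 1, 1, 2, 1, 1]
cm_weighted = confusion_matrix(y_true, y_pred, labels=order, sample_weight=weights)

print(cm)
print(cm_norm)
print(cm_weighted)
```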


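For example, to know the number of times the classifier confused images of 5s with 3s, you would look at the row for true label 5 and the column for predicted label 3 of the confusion matrix (row 5, column 3 when counting from zero with labels 0 to 9).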
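A minimal sketch of that lookup with hypothetical digit predictions. Passing `labels=list(range(10))` makes each row and column index equal to the digit it stands for, so `cm[5, 3]` is "true 5, predicted 3" and `cm[3, 5]` is the reverse mistake.

```python
from sklearn.metrics import confusion_matrix

# Hypothetical digit labels and predictions
y_true = [5, 5, 5, 3, 3, 8, 5, 0]
y_pred = [5, 3, 3, 3, 5, 8, 5, 0]

# labels 0-9 so that row/column index == digit, even for unseen digits
cm = confusion_matrix(y_true, y_pred, labels=list(range(10)))

# Row = true digit 5, column = predicted digit 3 (zero-based indices)
fives_as_threes = cm[5, 3]
# The opposite confusion sits in the transposed cell
threes_as_fives = cm[3, 5]
print(fives_as_threes, threes_as_fives)
```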

The confusion matrix itself is relatively simple to understand, but the related terminology can be confusing.

We will also discuss different performance metrics: classification accuracy, sensitivity, specificity, recall, and F1.
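For a binary problem, all of these metrics can be read off the four cells of the matrix. The sketch below uses hypothetical labels with 1 as the positive class; `ravel()` on a 2x2 matrix with labels `[0, 1]` yields TN, FP, FN, TP in that order. Sensitivity and recall are the same quantity, and the hand-computed values are checked against scikit-learn's built-ins.

```python
from sklearn.metrics import confusion_matrix, f1_score, recall_score

# Hypothetical binary labels: 1 = positive class, 0 = negative class
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]

# For labels [0, 1], ravel() returns the cells as TN, FP, FN, TP
tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()

accuracy = (tp + tn) / (tp + tn + fp + fn)
sensitivity = tp / (tp + fn)   # also called recall or true positive rate
specificity = tn / (tn + fp)   # true negative rate
precision = tp / (tp + fp)
f1 = 2 * precision * sensitivity / (precision + sensitivity)

print(tn, fp, fn, tp)
print(accuracy, sensitivity, specificity, f1)
# sklearn's built-in scores agree with the hand-computed ones
print(recall_score(y_true, y_pred), f1_score(y_true, y_pred))
```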

A confusion matrix is used to evaluate the correctness of a classification model.

Coming to the confusion matrix, it is a much more detailed representation of what's going on with your labels.

In this blog post, we will talk about the confusion matrix and its terminology.

Simple guide to confusion matrix terminology.

How many times have you read about the confusion matrix and, after a while, forgotten about true positives, false negatives, and so on? Even after implementing a confusion matrix with sklearn or TensorFlow, we still get confused about each component of the matrix.

A much better way to evaluate the performance of a classifier than looking at accuracy alone is to look at the confusion matrix.
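To see why a single accuracy number can hide problems, consider a hypothetical imbalanced dataset and a "classifier" that always predicts the majority class. Accuracy looks high, while the confusion matrix shows that every positive example is missed.

```python
from sklearn.metrics import accuracy_score, confusion_matrix

# Hypothetical imbalanced data: 95 negatives, 5 positives
y_true = [0] * 95 + [1] * 5
# A useless model that always predicts the majority class
y_pred = [0] * 100

print(accuracy_score(y_true, y_pred))   # 0.95
print(confusion_matrix(y_true, y_pred))
# [[95  0]
#  [ 5  0]]  -> all 5 positives end up in the "predicted 0" column
```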

The general idea is to count the number of times instances of class A are classified as class B.
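That counting idea needs nothing beyond the standard library. This sketch, with hypothetical class names, tallies every (true class, predicted class) pair and lays the counts out with true classes as rows and predicted classes as columns, the same layout scikit-learn uses.

```python
from collections import Counter

# Hypothetical labels with string class names
y_true = ["a", "a", "b", "b", "b", "c"]
y_pred = ["a", "b", "b", "a", "b", "c"]

# Count each (true class, predicted class) pair
pair_counts = Counter(zip(y_true, y_pred))

# Sorted class names give a stable row/column order
classes = sorted(set(y_true) | set(y_pred))

# Rows = true class, columns = predicted class; missing pairs count as 0
matrix = [[pair_counts[(t, p)] for p in classes] for t in classes]

for t, row in zip(classes, matrix):
    print(t, row)
```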

Example of confusion matrix usage to evaluate the quality of the output of a classifier on the iris data set.
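A minimal sketch of such an evaluation on the iris data set. `LogisticRegression` and the default 75/25 split with `random_state=0` are assumptions chosen for brevity, not necessarily the model or split used in the scikit-learn example.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import train_test_split

# Load iris and hold out 25% of the samples (default test_size) for evaluation
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Assumed classifier; any scikit-learn classifier would do here
clf = LogisticRegression(max_iter=1000).fit(X_train, y_train)
y_pred = clf.predict(X_test)

# 3x3 matrix: rows = true species, columns = predicted species
cm = confusion_matrix(y_test, y_pred)
print(cm)

# Optional plot (requires matplotlib and scikit-learn >= 0.22):
# from sklearn.metrics import ConfusionMatrixDisplay
# ConfusionMatrixDisplay(cm, display_labels=load_iris().target_names).plot()
```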


That's where such reports help.

A confusion matrix is a table that is often used to describe the performance of a classification model or classifier on a set of test data for which the true values are known.